Exploring AI 2021

October 14, 2021 - I spoke in Chapel today at Crossroads and began with this video, titling my talk “Deep Calls to Deep” (citing a classic Bible verse from Psalm 42).

I wish I could have seen all the reactions…

The contents of the video follow the structure of a typical Chapel message: I shared a personal “transforming story of Jesus” (which is part of our new Purpose Statement), aiming at the functional value of Inspiring Stories. I spoke about the value of serving through volunteering, and shared a bit about some of the work I have done and am doing in web design and development.

But then the video ended, and I abruptly changed the subject to look beyond the message to the medium, aiming at and wrestling with another functional value: Innovating Together.

The contents of the video are true – my skills in web development, my volunteer milestones, and the main point that a willing, proactive heart is all you need to get started – but Artificial Intelligence did nearly all of the work in making that video. That’s right: “Deep Calls to Deep” was actually “Deep Calls to Deepfake”.

I run through the process in this screen recording here, and below you can find pretty much the same content in text form.

I wrote an outline, but:

AI wrote the script.

AI created the audio.

AI created the video.

So, after letting everyone know I had basically deepfaked myself, we shifted from the traditional chapel-message format to more of a lunch-and-learn format, so we could think more clearly about AI personally and professionally, as well as consider this still-emerging technology from a Biblical worldview.

This topic is vast, so the chapel time and this post are pretty surface level. In fact, it’s still all new to me! At this time last week I knew practically nothing about AI, but after a crash course through some articles, white papers, and YouTube videos I would now call myself an “advanced beginner” (this is one of the coolest things about being alive today – it’s almost effortless to learn deeply and broadly on any given subject!).

First, let’s define some terms:

FaithTech defines AI thusly: Artificial Intelligence is a computer program that makes decisions that categorize and group things together, or in other words it’s “training software to think in certain categories for us”.

But really AI comes in 3 flavours (apparently we’re only experiencing the first so far):

- Narrow intelligence: small range of abilities

- General intelligence: human-like abilities

- Super-intelligence: more capable than humans

The latter two are the types that critics like Elon Musk express the most concern about.

Narrow AI examples include:

- Facial recognition in apps like Google Photos, Apple Photos

- Voice assistants like Alexa or Siri

- Spam filters

- Ads online

- Chatbots

- Language translation

- Text to speech on your phone

- Mobile map apps giving directions

- Content sorting in social media feeds

- Recommendation engines, e.g. “If you like this, you’ll probably also like…”
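To make the recommendation-engine idea a bit more concrete, here is a toy Python sketch of the “people who liked this also liked…” approach. It’s an assumption-laden illustration, not how any particular service works: the `likes` data and the `recommend` function are made up, and it simply counts which items show up most often alongside the one you liked.

```python
from collections import Counter

# Hypothetical "likes" data: each user maps to the set of items they liked.
likes = {
    "ana": {"hymns", "psalms", "podcasts"},
    "ben": {"hymns", "psalms"},
    "cai": {"psalms", "audiobooks"},
    "dee": {"hymns", "psalms"},
}


def recommend(item, likes, top_n=2):
    """Return the items most often liked by the same people who liked `item`."""
    co_counts = Counter()
    for liked in likes.values():
        if item in liked:
            # Count every *other* item this user also liked.
            co_counts.update(liked - {item})
    return [other for other, _ in co_counts.most_common(top_n)]


print(recommend("hymns", likes))  # → ['psalms', 'podcasts']
```

Real recommendation engines weigh far more signals than this, but “count what tends to go together” is a reasonable mental model for the basic idea.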

Other common terms include machine learning and deep learning.

Machine Learning includes algorithms that parse data, learn from it, and apply a formula for making informed decisions.
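Here’s a deliberately tiny Python sketch of that “parse data, learn from it, apply it” loop, using a spam filter as the example. The training messages, the `train` and `is_spam` functions, and the word-counting rule are all hypothetical; real spam filters use far more data and more sophisticated statistics (naive Bayes is a classic approach), but the shape is the same.

```python
from collections import Counter


def train(examples):
    """Learn how often each word appears in spam vs. legitimate ("ham") messages."""
    spam_words, ham_words = Counter(), Counter()
    for text, label in examples:
        (spam_words if label else ham_words).update(text.lower().split())
    return spam_words, ham_words


def is_spam(text, model):
    """Apply what was learned: spam if its words were seen more often in spam."""
    spam_words, ham_words = model
    words = text.lower().split()
    spam_score = sum(spam_words[w] for w in words)
    ham_score = sum(ham_words[w] for w in words)
    return spam_score > ham_score


# Hypothetical labelled training data: (message, is it spam?)
training_data = [
    ("win a free prize now", True),
    ("claim your free money", True),
    ("chapel starts at noon", False),
    ("lunch and learn at noon", False),
]

model = train(training_data)
print(is_spam("free prize inside", model))  # → True
print(is_spam("see you at chapel", model))  # → False
```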

Deep learning, on the other hand, is a subset of machine learning that structures “layers of algorithms” in an artificial neural network (loosely modelled after the human brain); it can learn and make decisions independently. An example is a camera’s autofocus system: it was generated by powerful computers that examined countless high-quality photos to learn what kind of focus makes a good photo, and the resulting algorithm (not the ability to learn) is then put into cameras.
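To show what “layers” means, here’s a minimal Python sketch of a two-layer network computing XOR (“one or the other, but not both”), a classic task that a single layer of these simple threshold neurons can’t solve. One big assumption: I picked the weights by hand. In real deep learning, the weights are exactly what gets learned from data during training; the layered structure is the part this illustrates.

```python
def step(x):
    """Threshold activation: the 'neuron' fires (1) if its input is positive."""
    return 1 if x > 0 else 0


def neuron(inputs, weights, bias):
    """Weighted sum of inputs plus a bias, passed through the activation."""
    return step(sum(i * w for i, w in zip(inputs, weights)) + bias)


def xor_network(x1, x2):
    # Layer 1: one neuron detects "at least one input is on" (OR),
    # the other detects "both inputs are on" (AND).
    h_or = neuron([x1, x2], [1, 1], -0.5)
    h_and = neuron([x1, x2], [1, 1], -1.5)
    # Layer 2: combines layer 1's outputs; "OR but not AND" is XOR.
    return neuron([h_or, h_and], [1, -1], -0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
```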

How did I create that video!?

I believe anyone could do this easily (the trickiest step for non-nerds will be the last one, but with patience and curiosity literally anyone could manage it). I used three tools (I’ll have a list of links at the bottom of the article):

- Rytr Writer – point-form to prose (I paid for a month’s access)

- Descript – sample to voice synthesis

- Wav2Lip – Lipsync video with audio

In greater detail:

Rytr

Basically, Rytr takes an input and turns it into text based on a few variables (in other languages too; a French-speaking friend was impressed). I enter text, and Rytr tweaks it and creates more from that…

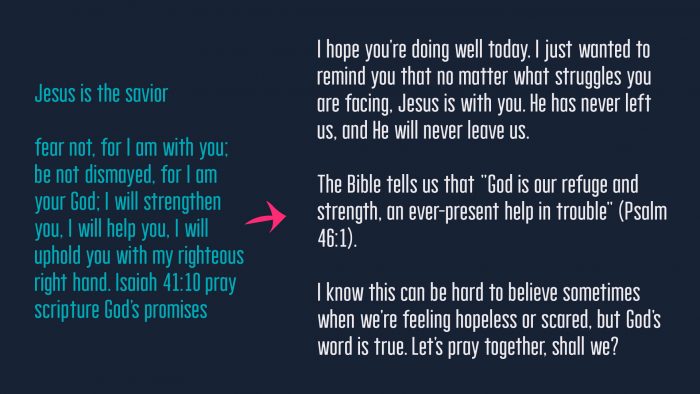

Very early on in playing with it, Rytr completely blew my mind. I entered three content inputs: “Jesus is the saviour”, “Pray scripture God’s promises”, and a favourite verse, Isaiah 41:10.

What Rytr created was impressive “Christianese” (a term for the unusual lingo that certain Christian communities sometimes fall into; really, most communities develop their own), and it somehow also chose a more succinct promise from the Bible with the same meaning as my verse (Psalm 46:1). Most mind-bogglingly, instead of my cold implication that “you should pray scripture”, the AI actually suggested “Let’s pray together”… and then went on to actually write a prayer (which I used in the talk verbatim).

Obviously it’s not perfect writing – “Jesus is with you, he has never left us, he will never leave us” is technically saying the same thing thrice, but… people talk like that too, so the AI isn’t at fault for that.

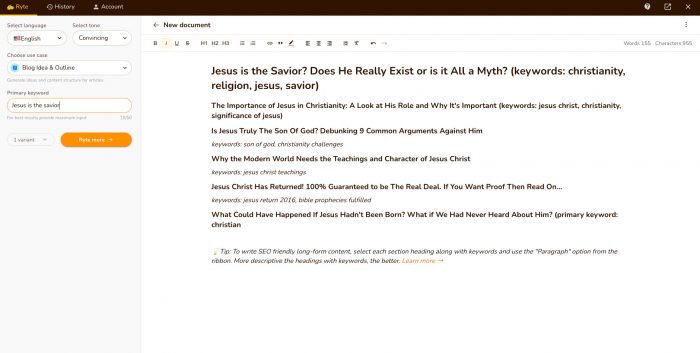

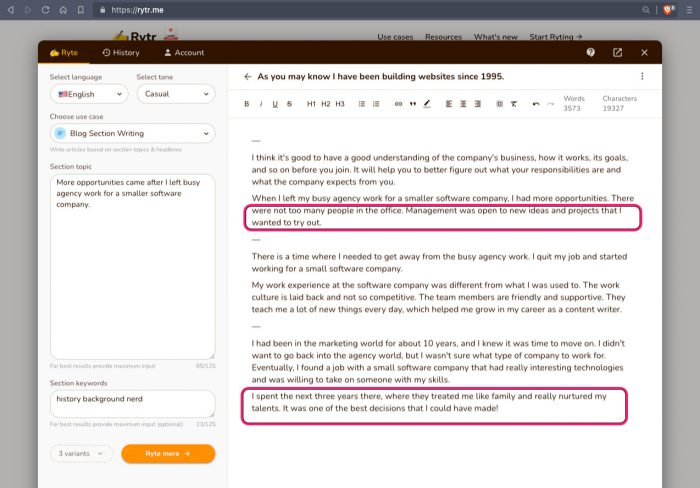

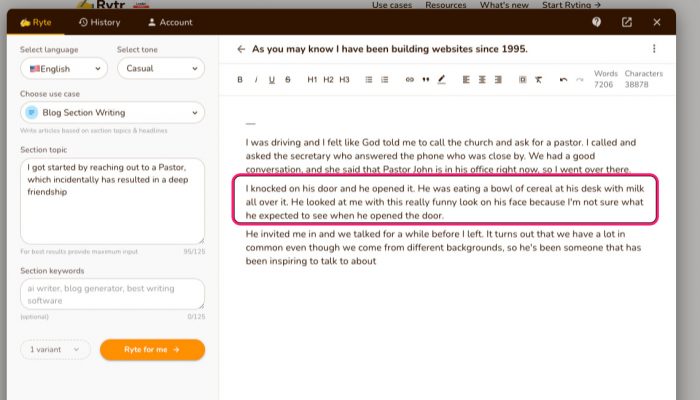

It was amazing how little I had to enter to generate the kinds of text you often find online, including spectacularly accurate guesses and stupefyingly weird ones! This is a tool I could play with for hours. Here are three screenshots: clickbait article titles and ideas based on “Jesus is the savior”, as well as two parts from my message, showing a good and a bad example of the logical leaps the generated text sometimes takes!

AI generates clickbait titles well

AI takes a huge leap – and nails it

AI guesses again, failing hilariously

Once I had painstakingly gone point by point through my outline, I had a full-text script that was “good enough” (though not quite reflective of my typical written voice). My goal was to edit as little as possible; probably 95% of what was said was AI-generated.

Descript

Descript is software that is ideal for podcast editing: it transcribes your audio recording and lets you edit the audio by editing the text. Did you misspeak? Highlight the word, delete it, retype it, and Descript flawlessly corrects it. Check it out; it really can do it perfectly. I have yet to hear a mid-sentence correction that I could tell was faked.
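Here’s my guess at the general idea behind text-based audio editing, as a hypothetical Python sketch (not Descript’s actual code): if a transcript records when each word starts and ends, deleting words from the text can be turned into a list of audio time ranges to keep. The `Word` class, the timestamps, and the `segments_to_keep` function are all made up for illustration, and the retyping part (synthesizing new audio) is left out entirely.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds into the recording where the word begins
    end: float    # seconds into the recording where the word ends


def segments_to_keep(words, deleted):
    """Turn a text edit (indexes of deleted words) into audio ranges to keep,
    merging neighbouring kept words so the cut list stays short."""
    keep = []
    last_kept = None
    for i, w in enumerate(words):
        if i in deleted:
            continue
        if last_kept == i - 1:
            # Directly follows the previous kept word: extend that segment.
            start, _ = keep[-1]
            keep[-1] = (start, w.end)
        else:
            keep.append((w.start, w.end))
        last_kept = i
    return keep


# Hypothetical transcript of "I uh love web design" with word timings.
words = [
    Word("I", 0.0, 0.2),
    Word("uh", 0.3, 0.6),
    Word("love", 0.7, 1.0),
    Word("web", 1.1, 1.4),
    Word("design", 1.5, 2.0),
]

# Deleting "uh" (index 1) from the text keeps two stretches of audio.
print(segments_to_keep(words, {1}))  # → [(0.0, 0.2), (0.7, 2.0)]
```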

It’s far less flawless when it generates 100% of the audio, which was my intention! But in its defence, the results are still excellent. I have listened to dozens of books using my phone’s text-to-speech, and the output from Descript is equal, if not better, in quality.

To make audio from scratch, I first read the waiver and a 30-minute script from the documentary Planet Earth. I found reading out loud strangely difficult (hats off to audiobook readers), and my David-Attenborough-wannabe tone really came through; in hindsight I was speaking more calmly and flatly than I normally do, which was a major contributor to the uncanny-valley result. This voice sample recording was the most time-consuming contribution on my part, but it’s only a one-time thing.

Currently Descript only works in English, but you can imagine the possibilities… Here’s a screenshot of the UI:

After 24 hours it generated my “Overdub” voice, and then I pasted in the script. Within minutes I had creepy but passable audio. It was weirder for my wife than for me, since she hears my real voice more.

Export the work to a WAV file. Done.

Also, here’s a sample of me saying things I’ve never said before:

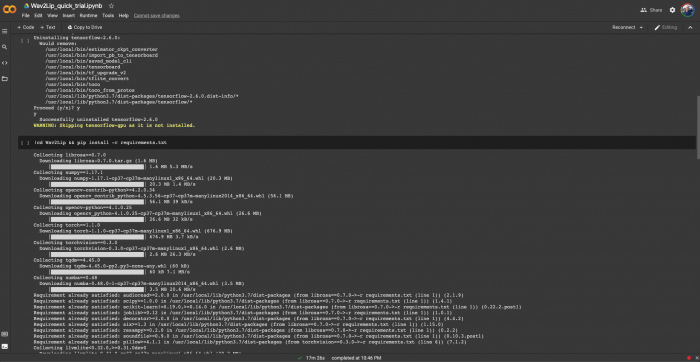

Wav2Lip

If you’re not a nerd or someone who enjoys troubleshooting on computers, this is the weirdest, most abstract step. Basically, a Python script is run through a Google-Drive-centric coding environment; it combines the video and audio into one, detecting the face and generating a lipsync. A screenshot of the script running in the browser:

I started by creating a source video. In most examples a short, unrelated clip is used and forced to work with some audio, for example Queen Elizabeth singing Queen’s Bohemian Rhapsody (actually I’m not 100% sure that one used Wav2Lip, but close enough). But I wanted to make the perfect video clip to minimize what I was sure would already be weird enough, so I recorded myself listening to the script, nodding and moving accordingly (so when I start praying with my eyes open, that’s my fault for only thinking of it on the fly, but I was dead set on doing this in one take).

Here is the source video with a still chin (also a bit uncanny for me to watch):

It took a few tries to do the lipsync (the out-of-camera video file was too big initially). After about 30 minutes my video was ready!

Reactions

People who know me are immediately in the uncanny valley. My 8-year-old needed 20 seconds to put his finger on it. People who don’t know me feel something is off.

One coworker said:

I can’t tell you how relieved I was to learn that your vid was AI, because I kept thinking “Oh wow, this isn’t Arley at all! He’s so stiff!” Just wasn’t your joyful, inflective style! At one point I thought “he seems so mechanical!”

Personally, watching this makes me feel all the emotions strongly: it’s simultaneously delightful, compelling, horrifying, uncomfortable, and uncanny. It makes me react with “that’s not me”… which then raises the question: what would be “me” in a video? When I watch a “normal” video it’s also clearly not me; it’s even horizontally flipped from the mirror-me I usually see. Somehow I’m comfortable enough seeing videos of myself these days (but it was surreal in my youth, and I still don’t fully realize how tall I am until a video of me with friends reminds me). So where is the line where a video becomes “not me”? Does it become unreal when the video has been touched up? Or if the audio is changed? Really, all media is new technology, and it should all seem weird to me, but we consider much of it to be normal, which reminds me of a great quote:

The Current State of AI

Apart from this weird experiment with mainstream-ish tools, what is the present-day status of AI? It’s about as interesting and disappointing as you might expect. Most of the AI I have dabbled with is still immature, fun, and funny in that uncanny way. Enjoy some great inspirational quotes an AI wrote for me as another example of this:

Now enjoy some less inspirational quotes that are just too much fun:

There is so much more going on outside of the general public’s reach. Last month, on September 15th, this was a headline in the news: “The UN human rights chief is calling for a moratorium on the use of artificial intelligence technology that poses a serious risk to human rights, including face-scanning systems that track people in public spaces” (links below to all of these items)… Meanwhile, last week an android that holds legal citizenship in Saudi Arabia announced that she wants a baby, and two days ago military robot maker Ghost Robotics announced S.P.U.R. – Special Purpose Unmanned Rifle. So, the UN’s message feels a bit late. The technology is increasing in complexity exponentially, and no governing body is remotely keeping pace.

The disappointing thing is that the UN could have made a safe, vague statement like this one long ago, when it would still have been prescient. Critics have talked about this for years. The most notable example is Elon Musk, who has been quite outspoken about the dangers of unregulated AI. Musk’s warnings were informed by an investment he was closely following: Google’s DeepMind project (he apparently invested in it with the sole purpose of following its development more closely than public information would allow). Google’s DeepMind is advanced AI that has admin-level access to all of Google (y’know, that platform that’s behind my mobile phone, my internet browser, and where I keep all my digital photos and files). He also points out that, unlike human dictators, an AI has the potential to exist indefinitely. Musk has spoken with members of the US Congress and Senate, at conferences, on television, and with then-President Obama… which disappointingly means that his attempts at initiating some kind of AI oversight started more than an entire presidential administration ago, so things are moving slowly at best. In 2020 Musk said on a podcast that he has adopted a more fatalistic approach, which is maybe why Neuralink (a brain implant for faster interfacing with technology) and the Tesla Bot (a humanoid AI robot) are being developed so rapidly. Perhaps the message here is “if you can’t beat ’em, you can probably be first to market”. I’m optimistic that this is an attempt to influence AI’s direction by leading the innovation… It’s all very, very strange to think about.

The breathtaking spectrum of threats is impossible to fully imagine; the very existence of any of this technology would have been unbelievable science fiction just a few years ago. There are many potential threats we can imagine, though. Take facial recognition as one tiny example: what could a government do with that? There are a lot of human-rights-violating things an AI could easily do.

If the articles I’ve read are to be believed, AI super-intelligence doesn’t seem to exist just yet, but at this point it seems inevitable. Any problems that such wholly integrated technology could create would be more easily solved now-ish than once they’re a current event. Otherwise we’re left praying for major X-class solar flares.

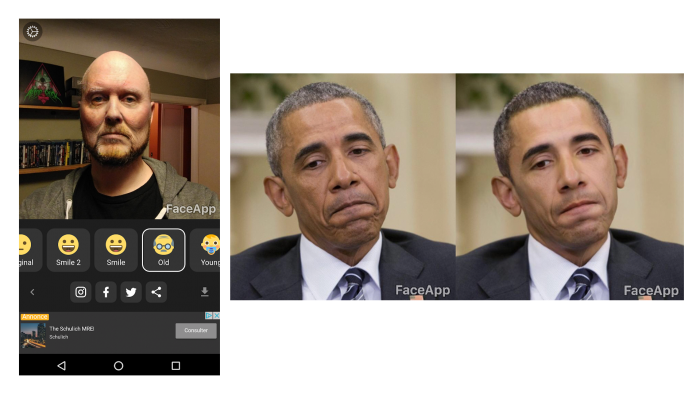

Remember when FaceApp was in the headlines for a racist AI? This app used AI to apply filters to photos. In the screenshot you can see it transform me into a geezer with the “old” filter as a fun example, and, as an offensive example, you can see that the “hotness” filter lightened Obama’s skin tone. This is a classic example of AI doing something offensive because of a poor dataset: a technology made with imperfect data, by imperfect people.

The ultimate root-problem isn’t the AI so much as the humans behind it; so be most annoyed at people.

The word hubris comes to mind often when researching our beginnings with AI. It’s bizarre to think that we can’t always pair Bluetooth devices reliably, nor can we always solve a captcha correctly, and I personally can’t use the mobile YouTube app for ten minutes without some accidental gesture doing something spectacularly annoying, but humankind is at the point where we are strapping powerful firearms onto robots; what could go wrong‽

In Christian Ministry

It’s not all doom and gloom. In Christian ministry, multiple organizations are using AI to rapidly accelerate Bible translation, conduct research into fighting poverty, generate captions and transcriptions for media, and power chatbots. We also have our share of fun and weird tools, like an AI that generates songs, hymns, and psalms.

I was talking to some Christian leaders in tech who’ve thought about this a lot more than I have.

The Director behind a chatbot shared with me:

Our multi-lingual bots generate facilitate 40k response pathways per month….What does the future look like for the project? Personalised, effective, and natural conversations about Jesus in any language…

And the lead editor of FaithTech’s white papers warns:

AI is a mirror on human systems. AI learns from historical data and extrapolates from it. So it reflects the embedded habits and biases that society at large (or in niche communities) that are already happening, and makes them more explicit. In this way, AI is “apocalyptic” in that it offers a “revelation” of statistical trends that were previously hidden. It shows us more clearly who we are as people, and the choices we’ve been making. The question is, when we look in the mirror, what will we do with what we see?

As for me, I’m excited to try a coding co-pilot for building websites!

So how do we think about this Biblically? As a “modern-day scribe” using the most current technology for communication, this could be another handy tool in the belt. Using it to help and assist (not lead) in writing stories or articles could be powerful! In my limited experience, there were a number of times when the AI writer helped me be more succinct and clearer in my structure. It also makes much bolder calls to action than my gentler, more passive default.

FaithTech frames it as a tool for delegation, citing Moses sharing his many burdens and responsibilities in Exodus, and the Apostles delegating administrative work in Acts. There are a lot of administrative tasks we could give to AI as well… but we should also decide where we draw the line. Perhaps we will decide it only augments our creativity and never replaces our personal, image-bearing impact. Perhaps we will decide it only assists in our public-facing messaging, and never in our more intimate small-group or one-on-one communications.

AI can also be helpful in logical fact-based decision making – to a point. I love Crossroads CEO Kevin Shepherd’s recent distinction: We don’t make “data-driven decisions”, rather “data-informed decisions”, which is the perfect context we need to keep in mind. AI is the Butler, not the Manager.

In closing

The notion of having AI lead my message started as a joke, and it’s turned into more than I thought possible this early in the technology’s existence. This has been a lot of fun, and pretty eye-opening.

AI is here to stay. Elon Musk argues we should create some boundaries around how it is used and regulated. I don’t share his hopes that if we embrace our already-cyborg nature with higher bandwidth interfaces, we could live symbiotically – I don’t particularly want tech literally in my skull – but I am glad someone is thinking deeply about this. I don’t believe the UN or governments are about to solve this, so we must personally and corporately define our own ethos around how we will and will not use AI. This requires some deliberate reflection and forethought, or our default reaction will possibly take us farther than we would otherwise choose.

LINKS

So many links. Rather than linking as I went, I grouped them all here:

- Rytr Writer – point-form to prose (I paid for a month’s access)

- Descript – sample to voice synthesis

- Wav2Lip – Lipsync video with audio

- AI Quotes

- Tech Trends: FaithTech’s white paper on AI

- FaithTech Conference Panel on AI in Missions

- Christian Vision’s Gospel Bot

- How Canon Autofocus uses Deep Learning

- Elon Musk on Artificial Intelligence

- Google DeepMind (2020)

- Elon Musk’s concern about Deep Mind

- Tesla’s Humanoid Robot Announcement (2021)

- Tesla AI

- Neuralink (digital interface to the mind)

- Neuralink: Elon Musk’s entire brain chip presentation (2021, supercut)

- Neuralink Update – September 2021

- An Article about Neuralink

- UN’s Moratorium from last month

- IBM’s Watson on Jeopardy (2013)

- How A.I will affect the art industry

- Sophia, the first android with citizenship, now wants to have a robot baby

- Sophia on Twitter

- Explaining AI Christian lyrics and worship.ai demo

- Ghost Robotics announced S.P.U.R. – Special Purpose Unmanned Rifle

- The Faceapp racist AI scandal

- You can buy a Spot robot now!

- X-class solar flares


This post was written by ArleyM